‘Individual science fiction stories may seem as trivial as ever to the blinder critics and philosophers of today – but the core of science fiction, its essence, has become crucial to our salvation if we are to be saved at all.’ So said the Godfather of SciFi, Isaac Asimov.

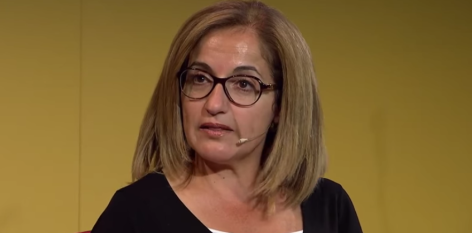

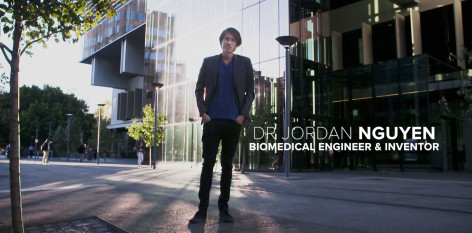

So what does biomedical engineer, would-be world changer and 2016 TEDxSydney speaker Dr Jordan Nguyen think? What role does SciFi (SF) play in the field and the lab? Here’s two coffees’ worth of Doc Jordy wading into some of his fave tech-noir and cyberpunk to talk Amanda Kaye through the ways SF informs his thinking and projects.

What’s your first love – robotics, artificial intelligence (AI) or virtual reality (VR)? And did SF inspire that first love?

My first love is definitely robotics and AI, probably more because of my life experiences. I remember at a very early age, playing checkers against one of my Dad’s AI robots. Dad built quite a few. I beat this one only a couple of times because it learned very quickly how to block me. It was really exciting to see this AI robot learn and develop intelligence.

Around the same age I saw the film Short Circuit, which explores what happens when a military robot with AI is struck by lightning and starts to learn like a human. That film was pretty amazing at showing what AI and robotics can do. Johnny Five was an influence for sure.

My love of VR came later, playing Halo and recognising the potential of this technology to create empathy.

Science fiction is a genre that trades in fear and anxiety. So much of it features the archetype of the mad scientist, the over-reacher who tips discovery into chaos.

Yeah, unfortunately there’s a lot of gloom and doom in SF. That’s what makes it into a lot of films, which is what scares the crap out of people when they start to think about these advancements.

What keeps you from becoming a mad scientist?

To some extent I think it’s about motivation.

At one point I thought I wasn’t cut out for this field – I almost failed a key subject at uni and I was thinking about quitting. I scraped a pass when they scaled the raw mark – it was touch and go. And not long after, I had an accident where I almost broke my neck diving in a pool. That was a real turning point for me.

I started learning about disability, and the people I was meeting inspired me to think differently. These were people who had spinal injuries or stroke, or high-level cerebral palsy. And what I found consistently was that these people – people with locked-in syndrome – they can’t move, can’t speak, they have to communicate through their eyes – these amazing, resilient people were more positive than anyone I knew. I want to do whatever I can to advance research that’s going to support their independence and quality of life. They inspire me and give me a purpose.

Where do you get your sense of boundaries?

For me boundaries are again about my driving force. Every technology that we develop – everything that has some sort of disrupting nature to it – has positives and negatives to it. The greatest advancements have been going into the military, but we need to be focussing on creating advancements that benefit humanity.

At one time I tried to draw up an artificial intelligence that could be curious. I stopped that project. I didn’t have a good reason to do it apart from marvelling at the fact I could do it. I didn’t know how it could help, and I could see some potential dangers in it.

But the lines are blurry and they are blurring further.

Loads of science fiction explores the tensions in the relationship between humans and machines. Is the divide between man and technology narrowing or becoming greater?

There’s no doubt that we are headed for the territory of the technological singularity, the idea that an AI will one day be on par with the human brain in every respect.

First, we’d have to let ourselves achieve that, and right now the biggest minds in the AI space are saying, let’s not get there. Not yet. The big question is, where is the point where we stop? We don’t have an agreement about where to draw the line.

Where it gets dangerous is when robots/AI are allowed to roam free and think for themselves and build a lot of data. That’s where the ambiguities lie – when they reach the conclusion that human beings are getting in their way. This is what the film The Matrix is about.

In The Matrix the AI does its thing, does its analysis as it’s been programmed to do, and sees that humans are a plague overtaking every corner of the globe, consuming resources, multiplying and expanding, creating pollution and destroying the planet. The robots rise, making war against the humans, who become enslaved as batteries to support them. And then the humans are forced to live out their lives in a virtual world. The intelligence didn’t need the humans anymore, and so logically enough, the Matrix decided to eradicate us.

Isn’t this why we need Isaac Asimov’s Three Laws of Robotics? (The Handbook of Robotics, 56th Edition, is published in 2058 A.D.)

We humans live in a world where we have made up laws that allow us to be governed and to live together without social collapse. We assume the same thing will work when you program a robot to follow a law, but this is exactly where it comes unstuck. Once it gets to this point – when you get even close to the technological singularity – true AI is unpredictable. It won’t be governed by Asimov’s laws or any others; it will come up with its own laws and its own rules.

Does the contemporary scientific community have some kind of document or code of ethics to govern artificial intelligence?

Not in the same way, and maybe we should. But the laws of robotics could be challenged. Asimov’s stories and the Will Smith film I, Robot explored the loopholes.

The First Law of Robotics is: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

But what if the robot unintentionally harms people? I built a robot that would stop moving if something was in front of her, but she was so thin and tall that if she stopped too suddenly she’d topple over and headbutt whoever was in her path. Just like humans, robots can bring harm to other humans without realising it.

In a way then, disobeying or falling short of Asimov’s Laws makes the robots even more like us?

That’s right.

So for you, what’s the relationship between science and SF?

I look to SF all the time. Scientists almost chase SF, because it puts these ideas into our heads. People often say about some technology that SF ‘predicted it’, but it’s not really prediction. SF has some great minds behind it who take a ‘what-if’ question and put together these great concepts that think through many different trends, come up with a potential concept, put it into our heads and then we start chasing it. Science fiction almost briefs scientists about what to do next.

The other big value of SF is that it allows us to think through the potential consequences of our actions. I want to show what we are capable of, to get people to think about it, talk about it, look at all the sides to the debate as we move into the future. It’s super important to think ahead, not just react to technology as it arrives.

How is the R&D into robots and AI informed by science fiction?

A lot of SF works through concepts – the writers are basically futurists. They think forward a whole bunch of steps – here’s a potential future that we could actually face if we are not careful, or if we keep on this trend, or if we try this out. They envision this future and bring it to life through stories. They don’t need the science to be there yet to explore the consequences. We don’t need to know how the flux capacitor works to understand the dilemmas faced by Marty McFly in Back to the Future.

Then when there’s enough detail in the SF we really do chase down a new technology. Like the iconic 3D touch screen from Minority Report that Tom Cruise manipulates at Pre-Crime. That tech didn’t exist when the film was made, and now it has become reality in around six different ways.

SciFi is cool because it inspires us. Those who can create sometimes don’t have the vision, and those who have the vision sometimes can’t create. What’s going on is a partnership between the arts and the sciences. And it’s fruitful.

To go back to The Matrix though, in that film man and machine are very much separate, the division is great, but as we move into the future what’s most likely to happen is a blurring of the lines between man and machine. A fusion of biology and technology.

Like in Ridley Scott’s Blade Runner? The AI-robots are ‘more human than human.’ The detective Deckard and the rogue cyborg Roy are more human than Tyrell, who is made of flesh and bone, but has become more corporation than human being. The robots are the ones who have a capacity for empathy. Can empathy be taught to robots/AI?

I think so. An artificial intelligence can model almost anything.

The massive challenge we’ll face is when AI appears to become empathetic, appears to become human in some way – the big part will be when it can completely trick us.

Will we end up using the Voight-Kampff test?

I think a test like this is pretty likely.

I’m remembering Leon being asked, ‘You’re in the desert, there’s a tortoise on its back... You’re not helping, Leon. Why is that?’

That test is trying to elicit an empathetic reaction. It’s going to be difficult to model, but we will get to a point where machines can do this – exhibit empathy, and self-awareness, have emotional responses. And then we face the big question of Blade Runner – who has the right to say these machines aren’t human?

Yeah, Deckard and Roy are sentient beings capable of empathy, love, fear and bravery. So when these robots have every quality that we consider human, and in more depth and concentration than the humans in the story, how do we decide what ‘human’ means?

Right. I believe we are at a point where very soon we are going to have to redefine what it means to be human. Soon we will be able to create a virtual version of ourselves, still be human as we know it but live in a virtual world and never age. Why does human identity need to rely on a biological system?

If our computers ran on flesh and blood and more of a biological operating system the lines would be even more blurred. Right now machines look like machines, so we draw a line in the sand. When machines don’t look like machines, things will have to change. And at that point a lot of people will be talking about robot rights.

It might be the final equality battle we have to fight for.

I believe that moving into the future we will have intelligent machines, we’ll have ‘pure’ humans and then we’ll have the in-between, the transhumans. This is the rise of transhumanism.

Transhumans are the cyborgs, and technically we’ve got plenty already. Bionic organs, pacemakers, bionic limbs – anyone with any one of these qualifies. But you could have three bionic limbs and some other bionic organ, and people won’t call you a cyborg. As we keep becoming more and more integrated with our technology, the line shifts. We are going to get to the point where it’s used not just to restore human functionality. People are going to want to start shifting parts in and out, to augment their athleticism and abilities.

By integrating with the AI we will be in a better position to keep control of it, because it will be a part of us. The more transhuman we are, the lower the risk of being overtaken by a race of robot overlords.

Later still we’ll get to the post-human era, when we are able to completely leave behind our own biology. That might take a number of different forms but we will no longer be human as we know it.

Blade Runner is a story about empathy as much as anything – Deckard’s for Rachael, Roy’s for Deckard – and you believe that VR can be a powerful tool for provoking empathy.

There’s no other means or method that we have to put ourselves properly in the head and point of view of another person. VR means you can literally see through their eyes and stand in their shoes.

I just came from walking around a life-size 3D copy of a guy who’s singing. I could see the texture of his clothing, and I was able to stand exactly where he’s standing and look down and see his body as if it was mine. His Hawaiian shirt and his tattoos became my shirt, my tattoos. It’s an incredibly powerful tool for empathy.

You’ve set up a social enterprise called Psykinetic to develop intelligent, futuristic and inclusive tech for people with disabilities. What SF novel or film most informs the work you do there and the approach you take?

Superhero movies inspired a lot of the thought-controlled design. Especially Professor X from X-Men. What he does is telekinesis – that’s pretty much what TIM the wheelchair does. And Iron Man does what I do – he designs things and tests them in an attempt to solve problems. Like him, we look at how the blurring of tech and biology can improve someone’s life.

My documentary Becoming Superhuman follows this thinking, and tracks the Psykinetic team’s process for solving a problem. Inspired by a young mate of mine, Riley, who’s got cerebral palsy, the team takes trends in tech and projects them forward to design ways to improve quality of life for people with disability. From there we look at how those technologies might be branched out to be useful to everyone else.

What SF prediction would you most like to realise in the future?

I’d like to crack sustainable immortality. Not just immortality where we live longer and overpopulate the earth, but sustainable immortality where we are able to thrive and survive and not have that massive footprint and impact on the earth. Maybe this is something that will happen in the post-human era. What’s certain is we are going to have to redefine what it is to be human if we want to survive as a species.

You tell a lot of stories yourself – why are stories important to a scientist?

Storytelling doesn’t mean making things up; it’s just the way you frame information.

I used to give presentations full of facts and times and data points and no one cared, not even me. But as soon as I started throwing in stories about the people who influenced the project and inspired it, it became interesting to my audience and to me. It started a real engagement with people.

The way I talk about what I do is in stories. Human existence has advanced to where it is today because of our storytelling abilities. It’s so important to pass on our knowledge and experiences. We don’t just absorb data and information unless it’s framed in a story, which makes that data meaningful and valuable. Stories spark human connection, the thing that, at least for now, still divides humans and robots. Stories are why people listen.

Watch Jordan’s TEDxSydney 2016 Talk – Technology Is Reinventing Humanity